Optimization adventures: making a parallel Rust workload even faster with data-oriented design (and other tricks)

This post is the second part of my adventures to optimize a Rust workload running on multiple threads.

In the first post, I explored how the rayon parallelism framework works, and explained how I designed a faster replacement for my use case.

However, the parallelism implementation was not the lowest-hanging fruit to optimize my program. In this post, I’ll present the other optimizations that the profiling tools led me to: compiler flags, inlining, fine-tuning data structures layout, re-writing arithmetic formulas, sorting and copying data.

Wait, what? Copying data, really?! Isn’t the whole point of fighting the Rust borrow checker that you unlock super-optimized zero-copy data structures?

If you’re confused about how copying could possibly be good for performance, read on to learn why!

- Inlining! (30% faster)

- Writing better arithmetic (up to 7x faster)

- Optimizing

BigInts (12% to 25% faster) - Data-oriented design (20% faster)

- Sorting and cloning data (20% faster)

- Optimizing

BigRationals (15x to 20x faster) - Generic optimizations

- Results

Inlining! (30% faster)

This is the optimization that I didn’t expect to have to manually do, but which turned out to be very effective. As pointed out by Matklad’s post Can You Trust a Compiler to Optimize Your Code?, inlining is really the root of all optimizations, because most other optimizations are local to each function.

To be more concrete, my code contained a newtype for fixed-point arithmetic, which manipulates integers that represent fixed-precision decimal numbers. A simplified view is the following, for a type that handles 9 decimal places.

/// A fixed-point decimal arithmetic for 9 decimal places. This type represents

/// a number `x` by the integer `x * 10^9`, backed by a [`i64`].

pub struct FixedDecimal9(i64);

impl FixedDecimal9 {

fn one() -> Self {

FixedDecimal9(1_000_000_000)

}

// Addition.

fn add(self, rhs: Self) -> Self {

FixedDecimal9(self.0 + rhs.0)

}

// Multiplication.

fn mul(self, rhs: Self) -> Self {

FixedDecimal9(((self.0 as i128 * rhs.0 as i128) / 1_000_000_000) as i64)

}

}

Importantly, my program spends most of its time doing arithmetic (either with this type or with another backend), and as you can see each function has a fairly simple arithmetic logic. As such, I expected the compiler to do the right thing in release mode and inline all of these small functions – otherwise the performance penalty can be significant. But it wasn’t the case, somehow the compiler didn’t deem worthwhile to inline these functions by default!

In the end, adding #[inline(always)] to all of these functions remediated the issue and decreased the runtime by around 30%.

Writing better arithmetic (up to 7x faster)

In the same spirit, some arithmetic formulas were not optimal.

When multiplying fixed-precision numbers backed by i64, I promoted them to i128 to avoid overflow during the multiplication, casting back to an i64 only at the end (or panicking if the result wouldn’t fit).

It turns out that unconditionally operating on 128-bit integers (on a 64-bit machine) isn’t well optimized by Rust1.

Instead, trying with checked_mul() first to see if the multiplication would fit in i64 and only falling back to i128 in case of overflow is more efficient.

This small change decreased the runtime by another 30%.

// Before

fn mul(self, rhs: Self) -> Self {

FixedDecimal9(((self.0 as i128 * rhs.0 as i128) / 1_000_000_000) as i64)

}

// After

fn mul(self, rhs: Self) -> Self {

FixedDecimal9(match self.0.checked_mul(rhs.0) {

// Fast path: the intermediate product fits in i64.

Some(product) => product / 1_000_000_000,

// The intermediate product doesn't fit in i64, fall back to i128.

None => ((self.0 as i128 * rhs.0 as i128) / 1_000_000_000) as i64,

})

}

Likewise, my multiplication implementation was not optimized with a BigInt backend.

I initially wrote a naive and very inefficient implementation: create a BigRational, round it down to an integer and take the numerator.

As it turns out, BigInt directly supports division by an integer, and it is much faster than the convoluted operations I was doing.

This change divided the total runtime by a factor seven!

// Before

fn mul(self, rhs: Self) -> Self {

let ratio = BigRational::new(self.0 * rhs.0, BigInt::from(1_000_000_000));

BigFixedDecimal9(ratio.floor().numer().clone())

}

// After

fn mul(self, rhs: Self) -> Self {

BigFixedDecimal9((self.0 * rhs.0) / 1_000_000_000)

}

The hard part about these optimizations is that they were hiding in plain sight in the profiler output. I could see some arithmetic functions taking a lot of runtime, but I didn’t pay attention to them at first, because I precisely expected my program to spend most of its time computing these expressions.

Optimizing BigInts (12% to 25% faster)

The BigInt type can represent arbitrarily large integers, which is important to prevent the election counting from failing due to integer overflow(s) (or worse, returning an incorrect result if arithmetic overflows aren’t caught).

In practice, a big integer is represented as a Vec<BigDigit>, where the digit type is chosen depending on the CPU architecture (typically u64 on 64-bit systems).

The problem with this representation is that a Vec incurs additional overhead due to a heap allocation and pointer indirection to access the data.

If you account that each arithmetic operation creates a new object, the overhead quickly adds up.

And this overhead exists even if the integers are in fact small (i.e. would fit in one u64).

To counter that, a common optimization known as the “small vec optimization” and implemented by crates2 like smallvec consists in storing the data inline rather than on the heap for vectors up to a certain length.

In particular, a Vec normally consists of 3 usize fields inline: a length, a capacity and a pointer to the data.

The trick is to change the memory layout if the length is at most 2: in that case, we store two values inline where the capacity and the pointer would normally be, and have nothing on the heap.

The

smallveccrate requires you to configure the size of this inline storage. For example, if you store bytes, an inline storage of[u8; 16]naturally takes the same space as the capacity and pointer (on a 64-bit system). But you can also make the inline storage larger if you only want to resort to heap allocations for larger arrays.

There was already a pending pull request to use a smallvec representation in the num-bigint crate, so I rebased it on top of the last release and tried it.

In practice, this meant creating a new GitHub repository with a branch named feature-small-uint, and patching the dependency in the Cargo.toml file of my main project as follows.

[patch.crates-io]

num-bigint = { git = "https://github.com/gendx/num-bigint.git", branch = "feature-small-uint" }

As documented here, you can do this kind of patching even for transitive (indirect) dependencies. The only constraint is that the version (as indicated in the

Cargo.tomlfile on the patch branch) matches the one resolved by Cargo, otherwise it will fail to compile.

This optimized data layout reduced the runtime by 12% to 25%. Interestingly, the larger relative gain was when using more threads. Perhaps because lots of small allocations from many threads creates contention on the global allocator?

Data-oriented design (20% faster)

Around the time I started this research, I stumbled upon two announcements highlighting huge performance improvements in crates to (de)compress PNG images.

Digging deeper, this blog post caught my eye, mentioning keeping data in the hot loop within the L1 cache of (only!) 32 KB.

This was before I ran lscpu and realized how small the caches are.

Needless to say, my input data was definitely not fitting in 32 KB.

In the election system that my program counts, the input consists of ballots where voters rank candidates, for example:

1. Alice, Bob, Charlie

2. David

3. Eva

Given that candidates can be ranked equally in a ballot (here Alice, Bob and Charlie are all ranked first), a reasonable but naive representation is to use nested Vecs, with each candidate represented by an index.

Above that, an election input is a long sequence of ballots, which I’m iterating over in parallel.

struct Ballot {

/// Ranking of candidates in the ballot.

order: Vec<Vec<usize>>,

}

The problem is that even though nested Vecs are very convenient to use in Rust, their memory representation is quite inefficient.

For example the ballot I showed above with 5 people ranked in 3 groups is represented by no less than 216 bytes in memory (on my 64-bit system).

vec![vec![1,2,3], vec![4], vec![5]]. 216 bytes!

As you can see, the Vec<Vec<usize>> is very bloated and contains several levels of indirection, which goes against the principles of data-oriented design of being as cache-friendly as possible.

- Each

Vecallocates extra slots to amortize the cost of potentially growing it later, but this means unused slots in the heap allocation and an extrausizeto keep track of the capacity separately from the length. All of this extra growing capacity is useless after parsing the input file, as the data structure becomes immutable. - Each index to represent a candidate uses 64 bits, even though in practice one byte would be enough (unless you run an election with more than 256 candidates, which isn’t very practical).

As a result, on my benchmark input with 1000 ballots, the deserialized election expanded to 776 KB.

This definitely doesn’t fit in L1 cache and is 5.7x larger than the input in text format of 137 KB!

And indeed, perf stat confirmed a lot of cache misses.

To make the ballots smaller and more cache-friendly, I explored several ideas: representing each candidate by a u8 index, applying the smallvec technique once again, using Box<[_]> instead of Vec<_> to remove the capacity field.

Lastly, I tried flattening the 2D structure, by storing a list of candidates paired with a list of positions where to “cut” the first list.

After benchmarking the options, the following flattened data structure won, using Box<[u8]> to represent each list.

This reduced the runtime by up to 20% in the best scenario.

[[1, 2, 3], [4], [5]]. 48 bytes.

This optimized data layout was especially successful under the following conditions.

- There is a lot of data to process (i.e. the entire ballot array doesn’t fit in cache). This is nice because a 20% improvement generally matters more for a task that takes seconds or minutes (processing lots of data) than for a task that takes milliseconds.

- There is little to compute for each piece of data, i.e. the program is data-bound rather than compute-bound. This compounds with all the arithmetic optimizations that made the hot loop faster.

I also tried to push data-oriented design further with bit-packing. With my example ballot, we can store each candidate index using 3 bits, plus an additional bit to indicate whether to split or not: all the information can fit into 3 bytes.

[[1, 2, 3], [4], [5]]. Only 3 bytes but slower.

With this extreme packing, perf stat confirmed that data cache misses decreased, but the program ran at least 30% slower, because of all the additional code to unpack the bit stream in the hot loop.

Additionally, rewriting my code to work with a bit-packed stream required a lot of refactoring, introducing complex Rust traits (hello GATs) to make the interface agnostic from implementation details and compatible with Rayon’s custom iteration traits.

This shows the limits of data-oriented design: by over-optimizing for one metric (data cache misses), you risk that other important metrics (instruction count, complexity of the source code) get worse.

Sorting and cloning data (20% faster)

After all of this, I was still looking for small optimization opportunities.

One thing I noticed was that an input file with ballots in sorted order was faster to process than a file with the same ballots in random order.

The cause can be spotted with perf stat: the sorted input causes twice fewer branch misses.

As a result, even though the number of instructions is very similar, they are executed in 16% fewer CPU cycles.

$ perf stat -d ./target/release/stv-rs --arithmetic fixed9 --input testdata/ballots/random/rand_hypergeometric_100k.blt meek --parallel=custom --num-threads=4 > /dev/null

19368.45 msec task-clock # 3.615 CPUs utilized

...

>>> 46756113989 cycles # 2.414 GHz (62.56%)

92823656008 instructions # 1.99 insn per cycle (75.06%)

17476981475 branches # 902.343 M/sec (74.99%)

>>> 498292295 branch-misses # 2.85% of all branches (74.92%)

25170184655 L1-dcache-loads # 1.300 G/sec (74.96%)

63703636 L1-dcache-load-misses # 0.25% of all L1-dcache accesses (75.06%)

3944145 LLC-loads # 203.638 K/sec (50.05%)

782226 LLC-load-misses # 19.83% of all LL-cache accesses (50.07%)

5.357838585 seconds time elapsed

$ perf stat -d ./target/release/stv-rs --arithmetic fixed9 --input testdata/shuffle_ballots/rand_100k_sorted_lexicographically.blt meek --parallel=custom --num-threads=4 > /dev/null

16413.20 msec task-clock # 3.543 CPUs utilized

...

>>> 39002811767 cycles # 2.376 GHz (62.40%)

92870675228 instructions # 2.38 insn per cycle (74.90%)

17491348340 branches # 1.066 G/sec (74.89%)

>>> 233168949 branch-misses # 1.33% of all branches (75.05%)

25158146743 L1-dcache-loads # 1.533 G/sec (75.08%)

65509824 L1-dcache-load-misses # 0.26% of all L1-dcache accesses (75.06%)

4692134 LLC-loads # 285.876 K/sec (50.03%)

747867 LLC-load-misses # 15.94% of all LL-cache accesses (49.91%)

4.632845305 seconds time elapsed

The underlying reason is simple: when consecutive ballots are “similar”, the CPU’s branch predictor can learn common patterns. In random order, there is no predictible structure, so the branch predictor makes more mistakes.

So, let’s sort the items after parsing the file, and we’ll get a speed-up right?

Unfortunately, that didn’t improve the speed as much as expected…

Fortunately, perf stat once again explained why: we’ve increased data cache misses – twice more L1 cache misses, and 14 times more L3 cache misses!

$ perf stat -d ./target/release/stv-rs --arithmetic fixed9 --input testdata/ballots/random/rand_hypergeometric_100k.blt meek --parallel=custom --num-threads=4 > /dev/null

18142.75 msec task-clock # 3.569 CPUs utilized

...

43042003486 cycles # 2.372 GHz (62.55%)

93348925387 instructions # 2.17 insn per cycle (74.95%)

17594108135 branches # 969.760 M/sec (75.07%)

242267145 branch-misses # 1.38% of all branches (75.00%)

25231903499 L1-dcache-loads # 1.391 G/sec (74.84%)

>>> 126872723 L1-dcache-load-misses # 0.50% of all L1-dcache accesses (74.93%)

>>> 42265583 LLC-loads # 2.330 M/sec (50.09%)

>>> 10657891 LLC-load-misses # 25.22% of all LL-cache accesses (50.09%)

5.084104818 seconds time elapsed

The underlying reason is that as we’ve seen each item points to allocated data structures. When we parse the input file, we ask the allocator for some chunks to allocate, and because we’re not deallocating anything, chances are that the allocator gives us chunks in order. This is good later on when we iterate over items in order, as the CPU prefetcher can guess where to fetch the next allocated chunk.

However, if we sort the input afterwards, we’re only moving the “root” data structures, not the allocated chunks. So by making our items logically sorted, we’re in fact randomizing the addresses of the allocated chunks! And in terms of data-oriented design, random access is the worst access pattern.

What we really want is to also sort the allocated objects. And an easy way to do that – you might have guessed from the title – is to clone the input! Indeed, the allocator is likely to give us new chunks sequentially in memory.

By paying the cost of cloning the input once after sorting it, we make all the sequential reads much faster afterwards.

This is an exception to Clippy’s assigning_clones lint where performance did increase by cloning things.

// This block intentionally clones the ballots into themselves to obtain a more

// efficient memory layout, which conflicts with this lint.

#[allow(clippy::assigning_clones)]

fn optimize_layout() {

ballots.sort_by(/* ... */);

ballots = ballots.clone();

}

The improvement is confirmed with perf stat: neither too many branch misses nor too many cache misses.

$ perf stat -d ./target/release/stv-rs --arithmetic fixed9 --input testdata/ballots/random/rand_hypergeometric_100k.blt meek --parallel=custom --num-threads=4 > /dev/null

16227.23 msec task-clock # 3.511 CPUs utilized

...

38828636272 cycles # 2.393 GHz (62.37%)

93401722600 instructions # 2.41 insn per cycle (74.84%)

17606891459 branches # 1.085 G/sec (74.91%)

231553556 branch-misses # 1.32% of all branches (74.94%)

25256845386 L1-dcache-loads # 1.556 G/sec (75.05%)

64909244 L1-dcache-load-misses # 0.26% of all L1-dcache accesses (75.26%)

5856429 LLC-loads # 360.901 K/sec (50.04%)

875500 LLC-load-misses # 14.95% of all LL-cache accesses (49.82%)

4.622040054 seconds time elapsed

In the best scenario (a large input that doesn’t fit in cache) sorting gave a 10% speedup, followed by another 10% by cloning the data. To learn more about memory fragmentation effects, I recommend fasterthanlime’s excellent video Why does this Rust program leak memory?.

And we’ve now committed one of the worst crimes in software engineering: depending on an implicit behavior of a dependency – that the allocator will pick sequential chunks when we clone the sorted data.

To productionize this, you probably want to use a custom allocation tool such as an arena instead, so that you can precisely control the behavior (and keep the assigning_clones lint happy).

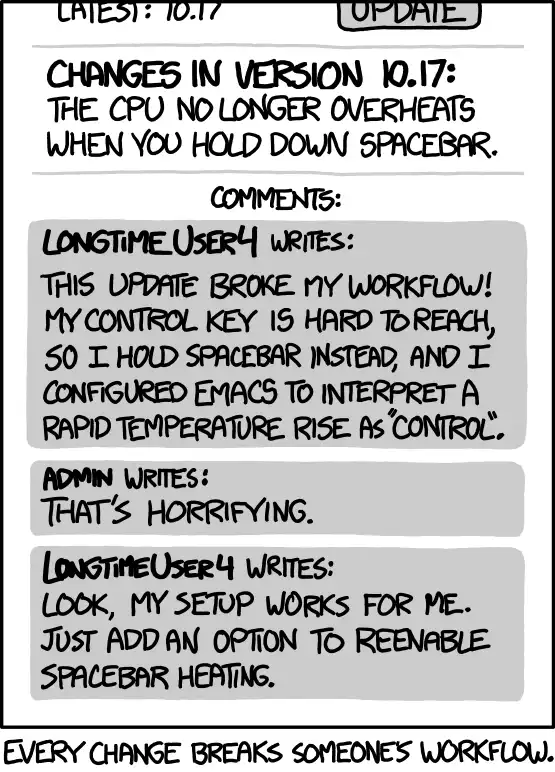

Credit: xkcd 1172 (CC BY-NC 2.5).

See also Hyrum’s Law.

Credit: xkcd 1172 (CC BY-NC 2.5).

See also Hyrum’s Law.

Optimizing BigRationals (15x to 20x faster)

This section is a bit more mathematical, but led to such a large improvement that I cannot exclude it from this post. Feel free to skip to the next section if the formulas get too technical.

Another back-end for my implementation is the BigRational type, which represents rational numbers exactly with arbitrary precision, by storing the numerator and denominator as BigInts.

This is good to compute exact results, but comes with a large performance cost.

One reason is that a BigRational stores each fraction in reduced form, i.e. preserving the invariant that .

For example, when calculating , a naive algorithm would return , but this can be reduced to .

Automatic reduction is a good default to keep the underlying integers small and under control when chaining multiple operations and therefore avoid algorithmic complexity explosion3. But the cost is that each arithmetic operation is expensive due to GCD/LCM calculations to keep the result reduced.

For example, a multiplication of two rational numbers is reduced as follows by the num-rational crate:

The formula is more complex for additions/subtractions, that are reduced as:

Quite a bit of computation for a simple addition!

Besides, each GCD/LCM involves multiple divisions, multiplications and subtractions on big integers.

As a result, my previous benchmarks showed that rational arithmetic was 3 times slower than the already non-optimal big-integer arithmetic, and overall 1000 times slower than floating-point arithmetic.

A quick look with perf record confirmed that indeed 86% of the runtime was spent on these GCD/LCM reductions.

While this behavior of BigRational is a good default, we can optimize much more based on the knowledge of the mathematical formula that we want to compute.

At a high level, the hot loop of my program computes a score for each candidate which is a sum of products, based on the weights .

For example, a score may look like this:

In this formula, each weight is rounded to some decimal precision (typically 6), and therefore can be written as where is an integer. This means that the final denominator will be of the form , so we can rewrite the formula as a sum of products of the integers , followed by only one division at the end. Using (big) integer arithmetic to compute this numerator avoids all the intermediate GCD reductions and is much faster.

This is a slightly simplified view though: in reality each term in the sum can be split, by dividing it by a small integer (at most the total number of candidates in the election).

So I still needed some rational arithmetic.

But rather than the naive/general implementation of BigRational, we can use a custom representation.

I chose to represent each denominator as its prime factorization , where are the (constant) prime numbers and the exponents describe the number.

Because the denominators are small, the exponents can generally be stored in a relatively small array (e.g. [u8; 24] if we only use the first 24 prime numbers and the exponents are all smaller than 256).

With this representation, we can perform partial GCD/LCM reductions for each multiplication/addition based on very fast formulas that only involve coefficient-wise minimum/maximum. A final full reduction is computed at the end when the sum is complete.

As a concrete example, calculating the sum gives , which isn’t fully reduced, but still better than the naive .

The implementation required quite a bit of refactoring (with an Easter egg to a previous post), but was also by far the most effective of this journey, making the program 14 to 18 times faster.

This compounds with the smallvec optimization on the underlying BigInt: overall, rational arithmetic became 15 to 20 times faster!

Generic optimizations

To finish this section, let me review some generally applicable optimizations, and whether they worked for me or not. The Rust Performance Book by Nicholas Nethercote provides a checklist, and I’ll mention some additional techniques.

Log filtering (up to 2x faster)

To help debug my code, I used the log crate during development, combined with env_logger to control logging via an environment variable.

This means that log statements are always compiled, but only executed at runtime depending on the value of the RUST_LOG environment variable.

In my case, I had some fine-grained trace-level statements in the hot loop. This code wouldn’t be executed at runtime if the environment variable was off, and we could in principle expect the CPU’s branch predictor to learn that. In reality, having this unused code in the hot loop still caused performance overhead, perhaps because it takes space in the instruction cache or may prevent other compile-time optimizations.

Fortunately, the log crate supports features to disable logging at compile time.

For example, the max_level_debug feature removes all logging statements beyond the debug level (i.e. all trace-level logs).

Depending on the scenario, this decreased the runtime by 5% to 10%.

[dependencies]

log = { version = "0.4", features = ["max_level_debug"] }

Additionally, the log_enabled! macro allows checking the logging level anywhere in your code, which is useful if you log something that’s expensive to compute.

In my case, I had patterns like if x < y { warn!(...) } which were expensive when using rational numbers (for which a comparison requires a lot of computation).

The total runtime decreased by 40% by adding a log_enabled check before the condition.

Quite a big improvement for a single line of code!

if log_enabled!(Level::Warn) && x < y {

warn!(...)

}

Disabling arithmetic overflow checks (20% faster)

Rust provides a mode to detect arithmetic overflows and panic the program if one occurs unexpectedly. This is good for correctness, but also incurs a performance penalty as a branch is inserted for each operation. In my case, the hot loop was arithmetic-heavy, and disabling overflow checks indeed reduced the runtime by 20%.

However, you may wonder what checks I’m talking about given that overflow checks are disabled by default in release mode, and nobody makes serious benchmarks in non-release mode.

In my case, I re-introduced the checks via a Cargo feature checked_i64 I defined for my crate, and the 20% improvement corresponds to disabling this feature.

fn add(lhs: Integer64, rhs: Integer64) -> Integer64 {

#[cfg(feature = "checked_i64")]

return Integer64(lhs.0.checked_add(rhs.0).unwrap());

#[cfg(not(feature = "checked_i64"))]

return Integer64(lhs.0 + rhs.0);

}

Panic = abort (mixed results)

The next trick – also generally mentioned to reduce the size of Rust binaries – is to change the behavior upon panic!.

When a panic occurs, Rust will by default execute some unwinding code to cleanup resources, display a useful backtrace and potentially catch the panic.

One can change this behavior to simply abort the program, which generates much less code.

[profile.release]

panic = "abort"

Of course, the benchmarks I used were not panicking, so how is that relevant? My intuition is similar to removing the logging code: even if some code is never executed, it can use precious space on the instruction cache.

Results were mixed though: I saw a runtime decrease of 15% to 20% when using big-integer arithmetic, but for other cases the outcome was neutral or even caused a 10% slowdown.

codegen-units = 1 (up to 15%)

By default, Rust splits the compilation of each crate into multiple codegen units to parallelize compilation. This usually makes compilation faster, but may prevent some optimizations between code fragments that are spread across different codegen units. You can set the number of units to 1 to avoid this pitfall.

In my case, this change was neutral for some scenarios but led to a 15% speedup in the best case.

[profile.release]

codegen-units = 1

LTO (up to 5%)

Another common trick is to enable link-time optimization, which allows the compiler to optimize the program globally (rather than only crate-by-crate), at the expense of longer compile times.

[profile.release]

lto = true

In my case, enabling LTO didn’t seem to affect performance much, apart from a 5% speedup in one scenario. This is understandable given that most of the logic in the hot loop is implemented in my crate, and therefore doesn’t benefit from inter-crate optimizations.

PGO (neutral)

I also tried profile-guided optimization (PGO). In principle, this works by first running an instrumented binary to gather a profile of which functions are the most used, and then compiling again with this profile to guide the compiler into better optimizing the code that is called more often.

For my program, I expected that this could at least detect the missed inlining of arithmetic functions (which are small and called very often). However, I didn’t observe any improvement, even for the best case scenario of profiling and then benchmarking on the same input (“overfitting”).

Perhaps my test was too short to gather a sufficiently long profile? Or PGO isn’t a silver bullet? In any case, it’s worth giving a try – PGO has led to performance improvements of up to 16% for the Rust compiler itself.

Targetting the native CPU (neutral)

By default, Rust generates code that works for all CPUs of the current architecture.

However, some families (especially Intel) offer new dedicated instructions on each CPU generation.

The target-cpu option allows the compiler to leverage all the instructions available on a specific CPU model, and notably the native value targets the CPU you’re compiling on.

RUSTFLAGS="-C target-cpu=native" cargo build --release

This can bring significant improvements on some workloads, however it had no noticeable effect in my case.

Switching allocators (neutral)

One last thing that I tried was to use jemalloc rather than the system allocator. Jemalloc is sometimes recommended as a way to improve performance, pitching itself as having “scalable concurrency support”, which seemed applicable to my use case. In particular, my original profiles showed a non-negligible time spent in malloc/free.

This was worth trying in the beginning of my research as it is easy to switch the allocator in Rust with a few lines of code, thanks to the jemallocator crate.

However, this made no noticeable difference.

Presumably, the allocation patterns in my program are quite simple and friendly to the default allocator?

There was anyway much more to gain by improving the layout of data structures to remove most allocations :)

Results

Optimizing this program was quite a journey!

In the end, the choice of the parallelism framework wasn’t the most important for performance. The largest improvements were either algorithmic (writing arithmetic in a better way), related to memory representation (smallvec, data-oriented design) or generic optimizations (e.g. forcing function inlining).

Here is an update of my previous benchmarks, for two versions of my program:

- STV-rs version 0.5.0,

- commit 473bcd6, which incorporates non-released optimizations: the patched

BigIntusing asmallvecbackend, disabled arithmetic overflow checks, disabled trace-level logs,panic=abortandcodegen-units=1– all together these unreleased optimizations make the program twice faster than version 0.5.0.

These benchmarks confirm that:

- Peak performance is achived with one thread per core (i.e. 4 threads), hyper-threading isn’t useful and adding more threads just increases the user and system times.

- Rayon can be 20% slower in wall time and twice slower in user time than custom parallelism on my workload.

- The system time overhead grows significantly with the number of threads using Rayon, while it stays stable and minimal using my custom parallelism implementation.

For reproducibility, these benchmarks were generated with Rust 1.79 on a x86_64 Linux machine.

$ cargo -vV cargo 1.79.0 (ffa9cf99a 2024-06-03) host: x86_64-unknown-linux-gnu os: Debian [64-bit] ...

-

Upon inspecting the assembly, the difference appears to be that the compiler applies a “division by a constant” optimization for types up to

i64, but falls back to a much slower generic division fori128(as provided by the__divti3function): https://rust.godbolt.org/z/Y6Mzffn87. ↩ -

The

tinyveccrate implements a similar pattern, but with a less optimal data layout (due to not using anyunsafecode), so I wouldn’t recommend it (the use ofunsafeis in my opinion justified for such a crate). ↩ -

Indeed, common arithmetic operations (multiplication, division, GCD) on big integers are quadratic in the number of bits (using naive algorithms), and the number of bits doubles for each unreduced multiplication. Therefore, a chain of unreduced operations on rational numbers makes the number of bits grow exponentially to . ↩

Comments

To react to this blog post please check the Mastodon thread, the Lobste.rs thread and the Reddit thread.

You may also like